Recently, three papers by IMR students were accepted by ICRA 2023, a top international robotics conference: "Long-Term Visual SLAM With Bayesian Persistence Filter Based Global Map Prediction", "CDFI: Cross Domain Feature Interaction for Robust Bronchi Lumen Detection" and "EgoHMR: Egocentric Human Mesh Recovery via Hierarchical Latent Diffusion Model".

The IEEE International Conference on Robotics and Automation (IEEE ICRA) is held annually by the IEEE Robotics and Automation Society. It is the premier international forum for leading robotics researchers to present their work.

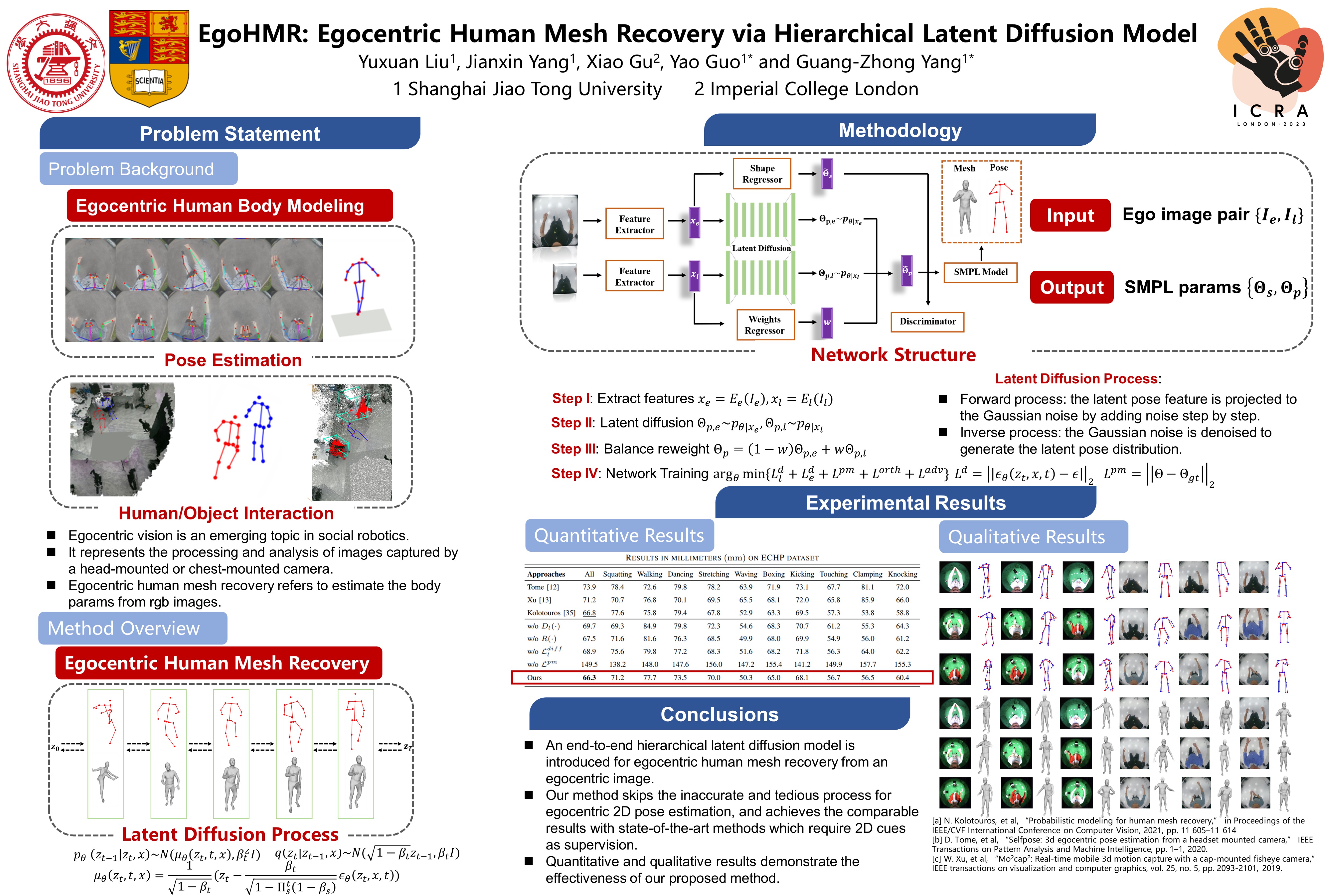

EgoHMR: Egocentric Human Mesh Recovery via Hierarchical Latent Diffusion Model

Yuxuan Liu, Professor Guang-Zhong Yang's team

Egocentric vision has gained increasing popularity in social robotics, showing great potential for personal assistance and human-centric behavior analysis. Holistic perception of the human body is a prerequisite for downstream applications such as action recognition and anticipation. Extensive research has addressed human mesh recovery from exocentric images captured from a third-person view, but few studies have tackled egocentric images, which are heavily distorted and often occluded. In this paper, we propose Egocentric Human Mesh Recovery (EgoHMR), a novel hierarchical network based on latent diffusion models. Our method takes a single egocentric frame as input and can be trained end to end without 2D pose supervision. The network builds on the latent diffusion model by incorporating both global and local features in a hierarchical structure. To train it, we generate weak labels from synchronized exocentric images. The proposed method performs human mesh recovery directly from egocentric images, and detailed quantitative and qualitative experiments demonstrate its effectiveness.

Fig. 1 Poster
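
The abstract does not describe EgoHMR's network in detail, so the sketch below shows only the generic forward (noising) step that DDPM-style latent diffusion models are trained on, in the common epsilon-prediction formulation: a clean latent z_0 is mixed with Gaussian noise as z_t = sqrt(a_bar_t) * z_0 + sqrt(1 - a_bar_t) * eps. It is an illustration under stated assumptions, not the authors' implementation: the linear beta schedule (1e-4 to 0.02), the plain list used as the latent, and all function names are hypothetical. The global and local image features described above would condition the reverse (denoising) network, which is not shown.

```python
import math
import random
from typing import List, Tuple


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Linearly spaced noise variances beta_1..beta_T (assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """a_bar_t = prod_{s <= t} (1 - beta_s), one value per diffusion step."""
    out: List[float] = []
    prod = 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(z0: List[float], t: int, alpha_bars: List[float],
             rng: random.Random) -> Tuple[List[float], List[float]]:
    """Draw z_t ~ N(sqrt(a_bar_t) z_0, (1 - a_bar_t) I) for a 0-indexed step t.

    Returns (z_t, eps); eps is the regression target of an
    epsilon-predicting denoiser.
    """
    a_bar = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in z0]
    zt = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e
          for x, e in zip(z0, eps)]
    return zt, eps


if __name__ == "__main__":
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    hypothetical_latent = [0.5, -1.0, 2.0]  # stand-in for a body-mesh latent
    noisy, target = q_sample(hypothetical_latent, 500, alpha_bars, random.Random(0))
    print(noisy, target)
```

A denoising network trained to predict `eps` from `z_t` (plus conditioning features) can then iteratively reverse this process at inference time.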

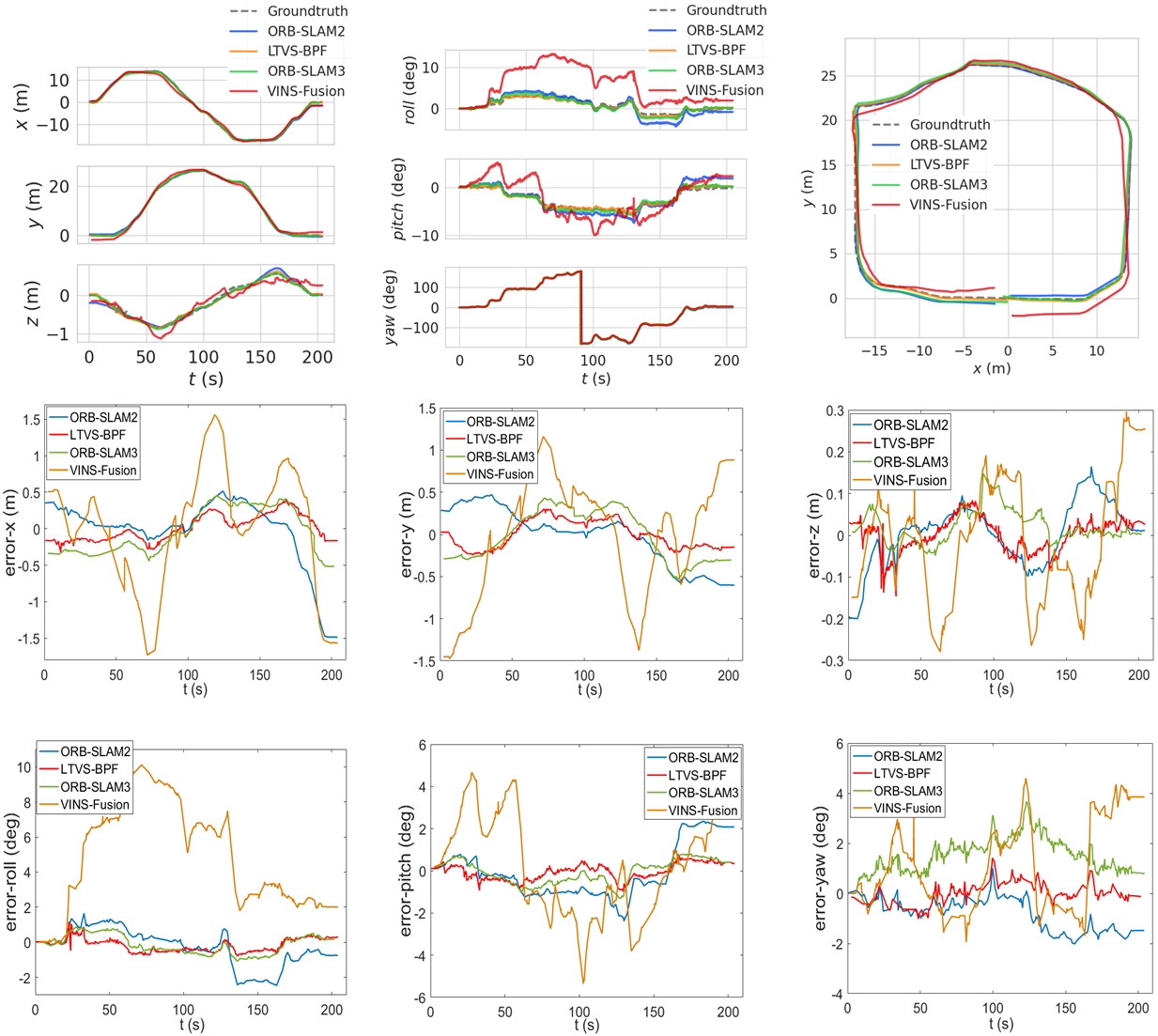

Long-Term Visual SLAM With Bayesian Persistence Filter Based Global Map Prediction

Tianchen Deng, Professor Weidong Chen's team

With the rapidly growing demand for accurate localization in real-world environments, visual SLAM has received significant attention in recent years. However, existing methods still suffer from degraded localization accuracy in long-term, changing environments. To address this problem, we propose a novel long-term SLAM system with map prediction and dynamics removal. First, a visual point cloud matching algorithm is designed to efficiently fuse 2D pixel information with 3D voxel information. Second, each map point is classified as static, semi-static, or dynamic using a Bayesian persistence filter. Dynamic map points are then removed to eliminate their influence, and a predicted global map is obtained by modeling the time series of the semi-static map points. Finally, the predicted global map is incorporated into a state-of-the-art SLAM method, yielding an efficient visual SLAM system for long-term dynamic environments. Extensive experiments were carried out with a wheelchair robot in an indoor environment over several months. The results show that our method achieves better map prediction accuracy and more robust localization performance.

Fig. 2 Result map
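
To make the persistence-filter step above more concrete, the sketch below computes the generic persistence-filter posterior: given a prior survival function F(t) = P(T >= t) on how long a map point persists, and noisy re-observations with missed-detection probability p_miss and false-alarm probability p_false, it returns the probability that the point still exists at a query time. The abstract does not give the paper's parameters or its exact classification rule, so the exponential prior, the detection probabilities, and the two thresholds in `classify_map_point` are all hypothetical placeholders, not the authors' settings.

```python
import math
from typing import Callable, Sequence


def exponential_survival(rate: float) -> Callable[[float], float]:
    """Assumed prior: persistence time T ~ Exponential(rate), F(t) = exp(-rate t)."""
    return lambda t: math.exp(-rate * t)


def persistence_posterior(times: Sequence[float], detections: Sequence[bool],
                          query_time: float, survival: Callable[[float], float],
                          p_miss: float = 0.1, p_false: float = 0.05) -> float:
    """P(map point still exists at query_time | detections), query_time >= last time."""
    n = len(times)
    if n and query_time < times[-1]:
        raise ValueError("query_time must not precede the last observation")

    def lik(y: bool, present: bool) -> float:
        # Observation model: misses when present, false alarms when absent.
        if present:
            return (1.0 - p_miss) if y else p_miss
        return p_false if y else (1.0 - p_false)

    # likelihoods[i]: probability of all detections if T lies in [t_i, t_{i+1}),
    # i.e. the first i observations saw an existing point and the rest did not.
    likelihoods = []
    for i in range(n + 1):
        value = 1.0
        for j, y in enumerate(detections):
            value *= lik(y, present=j < i)
        likelihoods.append(value)

    # Evidence p(Y): sum over the interval containing T, with t_0 = 0 and F(inf) = 0.
    boundaries = [0.0] + list(times)
    evidence = 0.0
    for i in range(n + 1):
        upper = survival(boundaries[i + 1]) if i < n else 0.0
        evidence += likelihoods[i] * (survival(boundaries[i]) - upper)

    # Only the "still present after the last observation" hypothesis supports X_t = 1.
    return likelihoods[n] * survival(query_time) / evidence


def classify_map_point(prob: float, static_thr: float = 0.9,
                       dynamic_thr: float = 0.2) -> str:
    """Hypothetical three-way labeling by persistence probability."""
    if prob >= static_thr:
        return "static"
    if prob < dynamic_thr:
        return "dynamic"
    return "semi-static"


if __name__ == "__main__":
    prior = exponential_survival(0.01)
    obs_times = [1.0, 2.0, 3.0, 4.0, 5.0]
    for dets in ([True] * 5, [True, True, False, False, False]):
        p = persistence_posterior(obs_times, dets, 6.0, prior)
        print(dets, round(p, 3), classify_map_point(p))
```

Because the posterior only needs the detection history and the survival prior, it can be evaluated per map point at each session; points labeled dynamic would be dropped, while semi-static ones would feed the time-series prediction described above.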

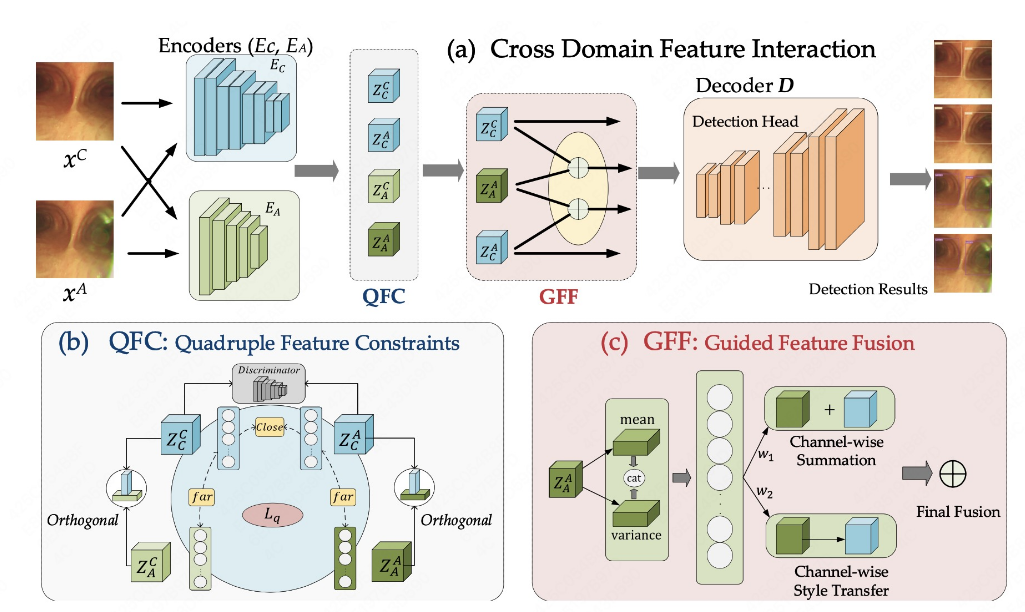

CDFI: Cross Domain Feature Interaction for Robust Bronchi Lumen Detection

Jiasheng Xu, Associate Professor Yun Gu's team

Endobronchial intervention is increasingly used as a minimally invasive treatment for pulmonary diseases. To reduce the difficulty of manipulation in complex airway networks, robust lumen detection is essential for intraoperative guidance. However, existing lumen detection methods are sensitive to visual artifacts, which are inevitable during surgery. In this work, we propose a cross domain feature interaction (CDFI) network that extracts the structural features of lumens and provides artifact cues to characterize the visual features. To extract the structural and artifact features effectively, a Quadruple Feature Constraints (QFC) module is designed to constrain the intrinsic connections among samples of varying imaging quality. Furthermore, a Guided Feature Fusion (GFF) module supervises the model to fuse features adaptively according to the type of artifact. Results show that the features extracted by the proposed method preserve the structural information of lumens despite large visual variations, substantially improving lumen detection accuracy.

Fig. 3 Framework of the proposed Cross Domain Feature Interaction (CDFI) method.